DeepCut: Unsupervised Segmentation using Graph Neural Networks Clustering

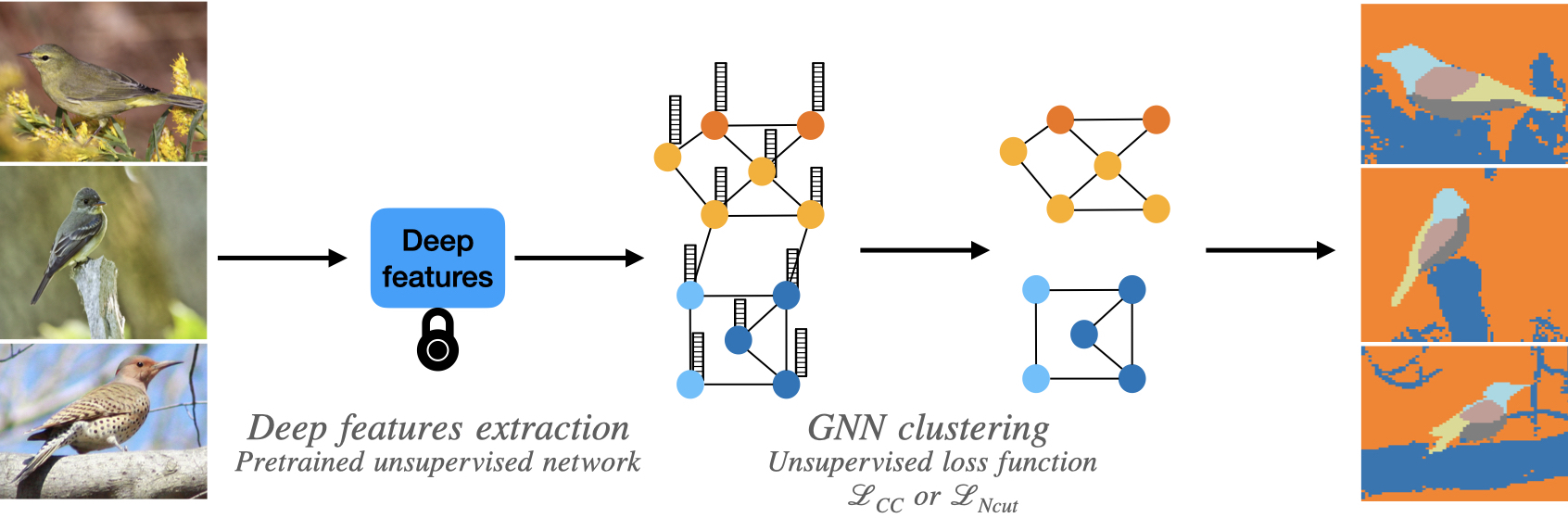

We use graph neural networks (GNNs) trained with unsupervised losses from classical graph theory to solve various image segmentation tasks. Deep features from a pre-trained vision transformer (ViT) serve as input to our network, which avoids the expensive training associated with end-to-end methods.
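The GNN receives both a graph over image patches and the raw patch features. Below is a minimal NumPy sketch of the graph side. It assumes one node per ViT patch and edge weights given by cosine similarity clipped at zero. The helper name `feature_affinity` and this exact affinity are illustrative assumptions, not DeepCut's code.

```python
import numpy as np


def feature_affinity(features: np.ndarray) -> np.ndarray:
    """Build a dense affinity matrix W from per-patch features.

    features: (N, D) array, one row per image patch (e.g. ViT patch tokens).
    Returns W: (N, N) with W[i, j] = max(cos(f_i, f_j), 0) and a zero diagonal.
    Assumed affinity (illustrative only; DeepCut's exact choice may differ).
    """
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-12)  # L2-normalize each patch feature
    W = unit @ unit.T                           # cosine similarity for every patch pair
    W = np.clip(W, 0.0, None)                   # keep only non-negative affinities
    np.fill_diagonal(W, 0.0)                    # no self-loops
    return W
```

The GNN would then take `W` together with the unmodified `features` as input, rather than only the scalar affinities that classical spectral clustering consumes.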

Abstract

Image segmentation is a fundamental task in computer vision. Data annotation for training supervised methods can be labor-intensive, motivating unsupervised methods. Current approaches often extract deep features from pre-trained networks to construct a graph, and then apply classical clustering methods like k-means and normalized cuts as a post-processing step. However, this approach reduces the high-dimensional information encoded in the features to pairwise scalar affinities. To address this limitation, this study introduces a lightweight Graph Neural Network (GNN) that replaces classical clustering methods while optimizing for the same clustering objective function. Unlike existing methods, our GNN takes both the pairwise affinities between local image features and the raw features as input. This direct connection between the raw features and the clustering objective enables us to implicitly classify clusters across different graphs, resulting in part semantic segmentation without additional post-processing steps. We demonstrate how classical clustering objectives can be formulated as self-supervised loss functions for training an image segmentation GNN. Furthermore, we employ the Correlation Clustering (CC) objective to perform clustering without defining the number of clusters, allowing for k-less clustering. We apply the proposed method to object localization, segmentation, and semantic part segmentation tasks, surpassing state-of-the-art performance on multiple benchmarks.
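To make "clustering objectives as self-supervised losses" concrete, here is a minimal NumPy sketch of two relaxed objectives evaluated on a soft assignment matrix `S` (N nodes by k clusters, each row a softmax over clusters, as a GNN head would produce). The normalized-cut term follows the MinCutPool-style relaxation. The correlation-clustering term uses the co-assignment probability `S @ S.T`. Both forms are assumptions about the losses, not a copy of DeepCut's implementation, and the GNN that produces `S` is omitted. In training, the same expressions would be written in an autodiff framework and minimized with respect to the GNN weights.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax: turns per-node GNN logits (N, k) into soft assignments S."""
    z = logits - logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def ncut_loss(S: np.ndarray, W: np.ndarray) -> float:
    """Relaxed normalized-cut loss in the MinCutPool style (an assumed form).

    S: (N, k) soft cluster assignments, rows sum to 1.
    W: (N, N) symmetric, non-negative affinity matrix.
    """
    D = np.diag(W.sum(axis=1))  # degree matrix
    # For hard assignments this is -(within-cluster edge weight / total degree).
    cut = -np.trace(S.T @ W @ S) / np.trace(S.T @ D @ S)
    StS = S.T @ S
    k = S.shape[1]
    # Orthogonality term: favors balanced, non-overlapping clusters and
    # penalizes the trivial solution that puts every node in one cluster.
    ortho = np.linalg.norm(StS / np.linalg.norm(StS) - np.eye(k) / np.sqrt(k))
    return float(cut + ortho)


def cc_loss(S: np.ndarray, W_signed: np.ndarray) -> float:
    """Relaxed correlation-clustering loss (an assumed form).

    W_signed: (N, N) symmetric weights; positive means "same cluster",
    negative means "different clusters".
    (S @ S.T)[i, j] is the probability that nodes i and j share a cluster, so
    unused columns of S cost nothing: k only needs to be an upper bound.
    """
    same = S @ S.T
    return float(-np.sum(W_signed * same))
```

On two disconnected 3-node cliques, the assignment that separates the cliques scores lower on both losses than the one that merges them. Appending an empty third column to the correct assignment leaves the CC loss unchanged, which is what makes a k-less formulation possible.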

Examples

All examples were produced using DeepCut without post-processing steps such as CRF, CAD, or a bilateral solver.

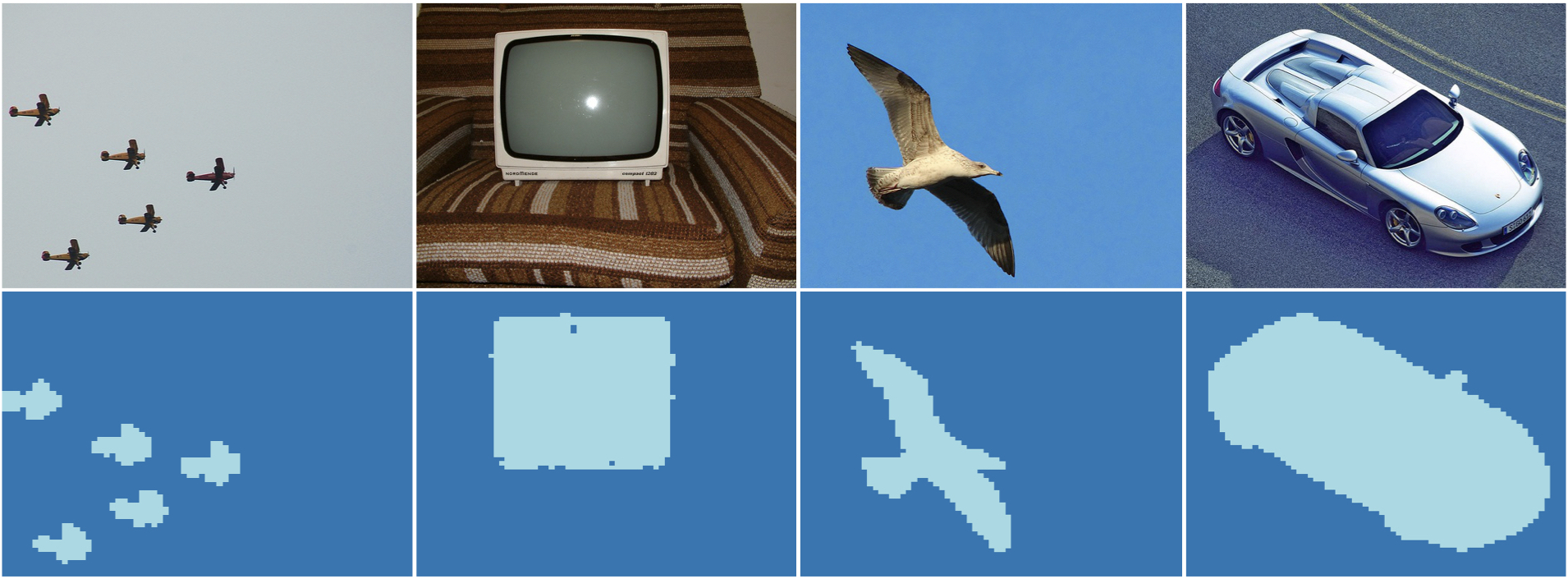

Single object segmentation

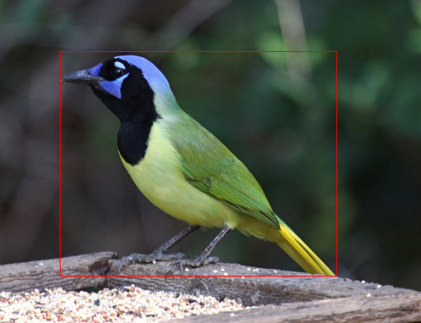

We cluster the image into two groups with different semantic meanings and choose the largest connected component as the segmented object. For object detection, we draw a bounding box around the selected object.

The original image with a bounding box created using DeepCut (left) and single object segmentation using DeepCut (right).

Pairs of images (top) and their foreground-background segmentations (bottom). To achieve single object segmentation in cases such as the bottom-left image, we choose one cluster (the largest connected component in the graph).
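Selecting the largest connected component and drawing its bounding box are standard image operations. Below is a dependency-light sketch. It assumes a binary mask for the chosen cluster on a 2-D grid (for example the ViT patch grid) and 4-connectivity. `largest_component` and `bounding_box` are hypothetical helper names, and libraries such as SciPy offer equivalent labeling routines.

```python
from collections import deque

import numpy as np


def largest_component(mask: np.ndarray) -> np.ndarray:
    """Return a boolean mask of the largest 4-connected component of `mask`."""
    mask = mask.astype(bool)
    h, w = mask.shape
    seen = np.zeros_like(mask)
    best: list[tuple[int, int]] = []
    for sy in range(h):
        for sx in range(w):
            if not mask[sy, sx] or seen[sy, sx]:
                continue
            # Breadth-first search collects every pixel reachable from (sy, sx).
            comp = []
            queue = deque([(sy, sx)])
            seen[sy, sx] = True
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(comp) > len(best):
                best = comp
    out = np.zeros_like(mask)
    for y, x in best:
        out[y, x] = True
    return out


def bounding_box(mask: np.ndarray):
    """Inclusive (x_min, y_min, x_max, y_max) of the True pixels, or None if empty."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())
```

Which of the two clusters is treated as the object is not decided by these helpers; they only keep the largest blob of a given mask and box it.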

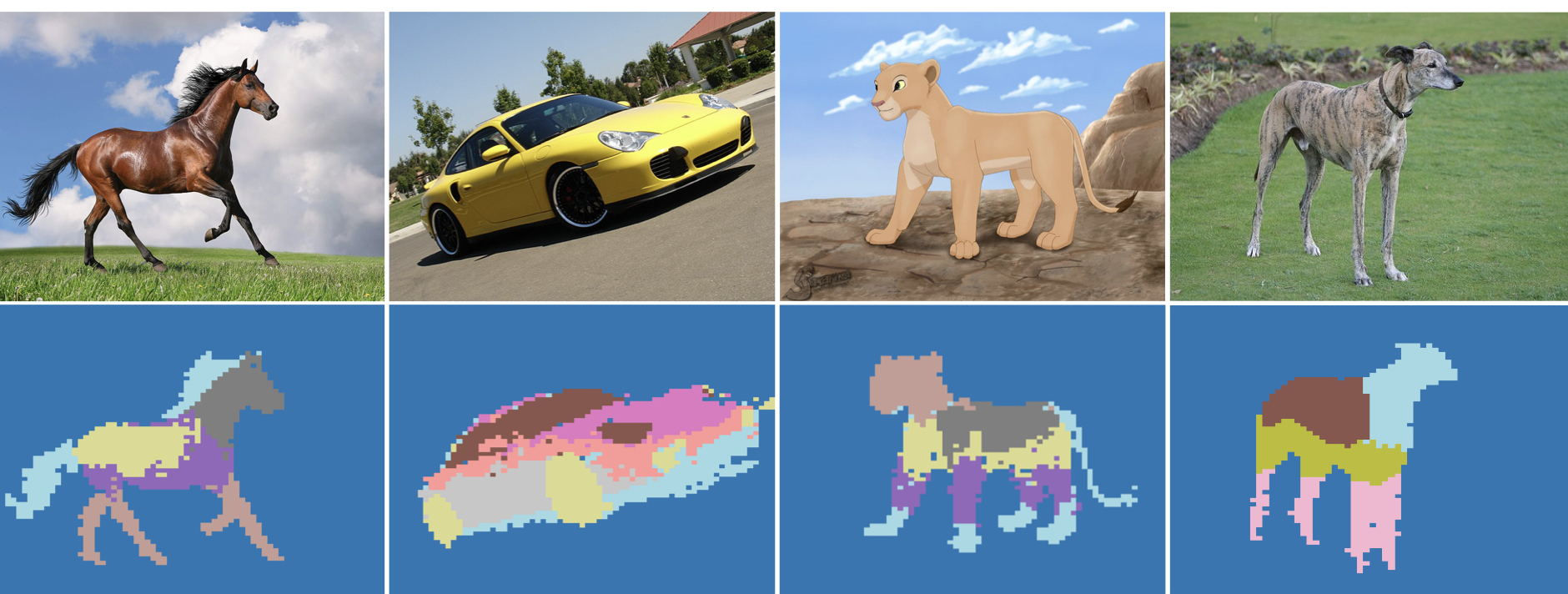

Semantic part segmentation

Semantic part segmentation of all three images using DeepCut with the two-stage segmentation described in the paper. Top: original image, middle: foreground part segmentation, bottom: foreground and background part segmentation.
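The captions suggest that a second stage splits each first-stage segment into parts. One plausible way to wire this up, assuming stage 2 re-clusters the subgraph induced by each stage-1 group, is sketched below; the paper's exact procedure may differ. `cluster_fn` is a hypothetical stand-in for the trained GNN, and `rank_split` is a toy clustering used only so the sketch runs.

```python
from typing import Callable

import numpy as np

# Hypothetical interface: cluster_fn(W_sub, features_sub, k) -> label in [0, k) per node.
ClusterFn = Callable[[np.ndarray, np.ndarray, int], np.ndarray]


def two_stage_segment(W: np.ndarray, features: np.ndarray,
                      cluster_fn: ClusterFn, k_parts: int):
    """Stage 1: split all nodes into 2 groups (e.g. foreground / background).
    Stage 2: split each group into k_parts parts by re-clustering its induced subgraph.
    Returns (stage1_labels, part_labels); parts of group g are offset by g * k_parts.
    """
    stage1 = np.asarray(cluster_fn(W, features, 2))
    parts = np.full(W.shape[0], -1, dtype=int)
    for group in (0, 1):
        idx = np.flatnonzero(stage1 == group)
        if idx.size == 0:
            continue
        sub_W = W[np.ix_(idx, idx)]  # affinities among this group's nodes only
        sub = np.asarray(cluster_fn(sub_W, features[idx], k_parts))
        parts[idx] = sub + group * k_parts  # keep the two groups' part ids disjoint
    return stage1, parts


def rank_split(W: np.ndarray, features: np.ndarray, k: int) -> np.ndarray:
    """Toy stand-in for a trained GNN: k equal bins by the first feature (ignores W)."""
    ranks = np.argsort(np.argsort(features[:, 0]))
    return ranks * k // len(features)
```

Restricting stage 2 to the induced subgraph keeps parts of the foreground from merging with background regions, which matches the middle row showing foreground parts only.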

Examples of semantic part segmentation. Note that here the segmentation is performed on each image separately.

Video semantic part segmentation

Video semantic part segmentation. Note the implicit tracking of the segmented parts across frames.

Acknowledgments

Special thanks to the SAMPL AI team: Dudi Radosezki, Ariel Keslassy, Lital Binyamin, Chen Solomon, Yishai Schlesinger, Michal Katirai and Hana Hasan.